Technological aspects of the gaze estimation project

To build a solution that goes beyond the current state of the art, we had to make full use of the available resources. The crucial first step was a stable foundation on which new features could be created and developed, bringing the product step by step closer to its intended final form.

- We used Python, PyTorch and OpenCV, among other libraries, to create the base algorithm. Later development was driven by data from early tests and by larger datasets gathered through the ClickWorker crowd-sourcing platform.

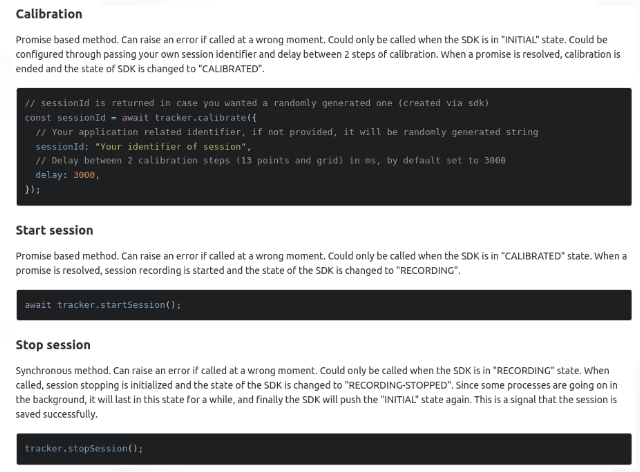

- JavaScript was used to develop WETSDK, the Training Data Collection App (TDCA) and an example web application illustrating the production use case.

- FastAPI was used to develop the communication interface between the end-user side (the web app) and the algorithm running on the backend server.

- AWS allowed us to store the training and validation data in the cloud.

- Docker made it easier to encapsulate the algorithm in self-contained software images that can run in the cloud.

Because the project is both complex and novel, it has to be divided into multiple stages and requires extensive research, including a trial-and-error approach. The biggest challenges come from the dynamic environment: we wanted a solution that works “in the wild”, without any specific hardware and with minimal prerequisites on the user's side.

This raised several obstacles, including the complexity of calibrating the phone camera. Phone screens and cameras differ from model to model, so it is hard to find a generic estimation method, especially since phone manufacturers don’t disclose the physical dimensions of their devices.

Since users need complete freedom in how they use their phones, the gaze estimation algorithm has to calibrate itself automatically across different smartphone models. This is difficult given the variation in viewing angles, distances, and face detection capabilities. Our neural networks are therefore trained on a variety of faces and angles, so that the results hold up under regular use.

Because the ClickWorker platform yields a large volume of crowd-sourced data, the team has to evaluate its quality. An automatic framework was developed to filter out recordings that fail basic quality criteria, such as adequate lighting and absence of blur.
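To make the idea of such a filter concrete, here is a minimal sketch of what lighting and blur checks could look like. It is not the project's actual framework: the function names, the thresholds and the 80% frame ratio are all assumptions chosen for illustration, and frames are represented as plain lists of grayscale rows to keep the example dependency-free. Blur is approximated by the variance of the Laplacian, a common heuristic; with OpenCV, a close counterpart would be `cv2.Laplacian(gray, cv2.CV_64F).var()`.

```python
from statistics import mean, pvariance

# Hypothetical thresholds for illustration only; real values would have to be
# tuned on recordings that were labeled good or bad by hand.
MIN_BRIGHTNESS = 40.0   # mean gray level (0-255) below which a frame is too dark
MAX_BRIGHTNESS = 220.0  # mean gray level above which a frame is overexposed
MIN_SHARPNESS = 100.0   # Laplacian variance below which a frame counts as blurred


def brightness(gray):
    """Mean gray level of a frame given as a list of rows of 0-255 values."""
    return mean(px for row in gray for px in row)


def sharpness(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels.

    Sharp edges produce strong positive and negative responses, so a low
    variance suggests a blurred (or featureless) frame.
    """
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, len(gray) - 1)
        for x in range(1, len(gray[0]) - 1)
    ]
    # Frames smaller than 3x3 have no interior pixels; treat them as not sharp.
    return pvariance(responses) if responses else 0.0


def frame_passes(gray):
    """True if the frame is neither too dark, too bright, nor too blurred."""
    return (
        MIN_BRIGHTNESS <= brightness(gray) <= MAX_BRIGHTNESS
        and sharpness(gray) >= MIN_SHARPNESS
    )


def recording_passes(frames, min_good_ratio=0.8):
    """Keep a recording if at least min_good_ratio of its frames pass."""
    if not frames:
        return False
    good = sum(frame_passes(frame) for frame in frames)
    return good / len(frames) >= min_good_ratio
```

Scoring a recording by the share of acceptable frames, rather than rejecting it on the first bad frame, is one possible design choice: it tolerates a brief hand shake or a passing shadow while still discarding recordings that are dark or blurred throughout.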